Introduction to Emmi Framework

Main Components

Warning

The module layout is still evolving. This page describes the current components and may change in future releases.

Emmi Framework is organized into the following Python modules:

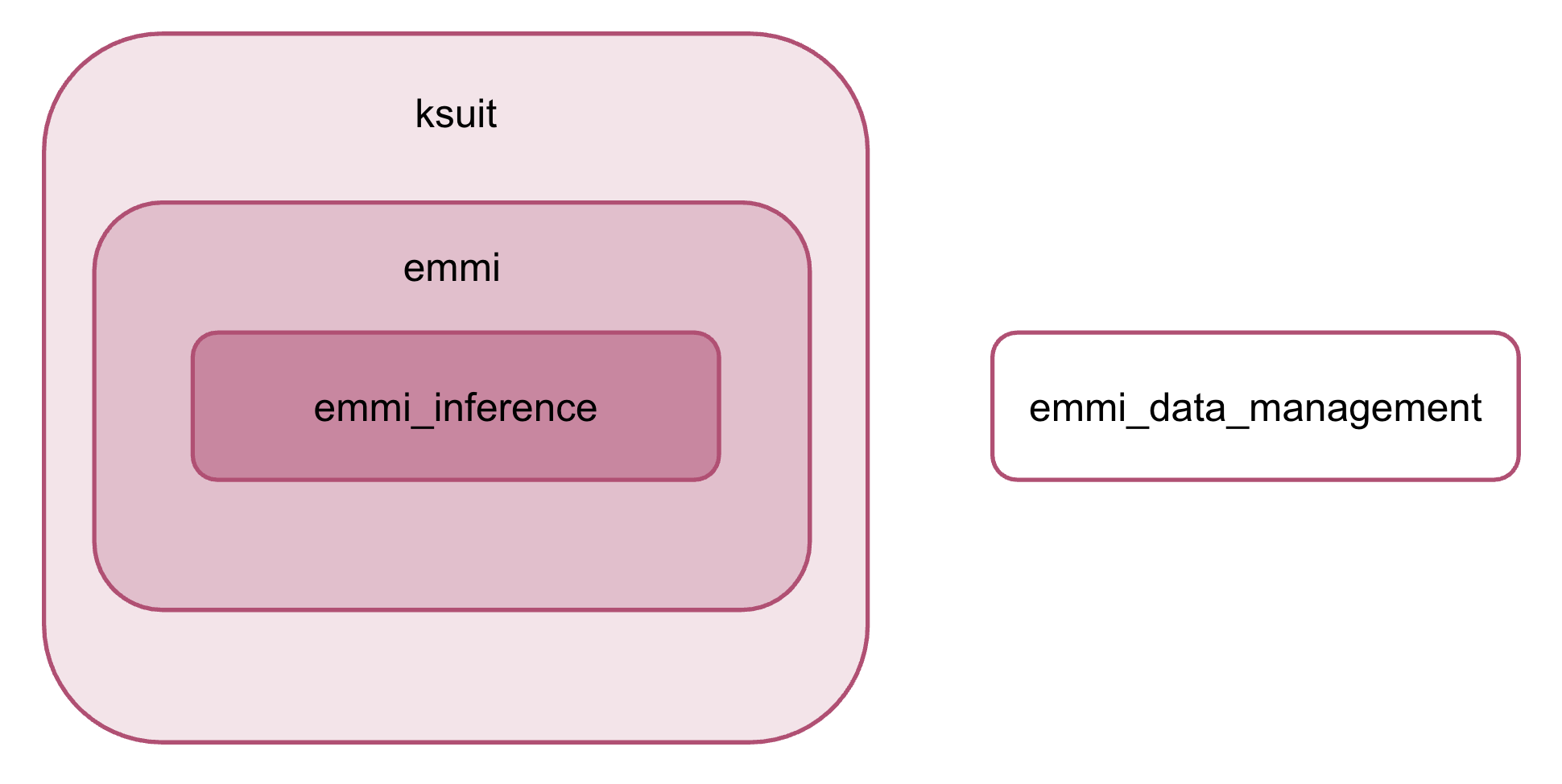

emmi - high-level ML and data modules

emmi_data_management - data fetching and storage utilities

emmi_inference - inference tools

ksuit - low-level ML codebase responsible for the heavy lifting of this framework

Core layering of modules

How Do They Interact

The interaction between the modules is best illustrated with a typical workflow. Suppose a user wants to train a model such as AB-UPT. They have two options:

Use configuration files to set up an experiment

Use code and tailor it to specific needs

In either case, the same underlying shared codebase is used to ensure consistent behavior.
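To illustrate how both routes can share one code path, here is a minimal sketch in plain Python. The names `TrainerConfig`, `Trainer`, `build_trainer`, and `build_from_json` are hypothetical and are not part of the emmi or ksuit APIs. JSON is used only because it is in the standard library; the framework's actual config format may differ. The point is that a config file and hand-written code funnel into the same constructor, so the resulting objects behave identically.

```python
import json
from dataclasses import dataclass


# Hypothetical config object (not an emmi/ksuit class).
@dataclass(frozen=True)
class TrainerConfig:
    model: str
    epochs: int = 10
    lr: float = 1e-3


# Hypothetical trainer standing in for a real framework trainer.
@dataclass
class Trainer:
    config: TrainerConfig

    def describe(self) -> str:
        return f"{self.config.model}: {self.config.epochs} epochs @ lr={self.config.lr}"


def build_trainer(config: TrainerConfig) -> Trainer:
    # Single shared entry point used by both the config route and the code route.
    return Trainer(config)


def build_from_json(text: str) -> Trainer:
    # Config route: parse JSON, let the dataclass check field names, then reuse build_trainer.
    return build_trainer(TrainerConfig(**json.loads(text)))


if __name__ == "__main__":
    from_file = build_from_json('{"model": "ab-upt", "epochs": 5}')
    from_code = build_trainer(TrainerConfig(model="ab-upt", epochs=5))
    print(from_file.describe())
    print(from_file == from_code)  # both routes produce equal trainers
```

Because the config route only parses input before delegating, any fix or feature added to `build_trainer` automatically applies to both workflows.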

Our main building blocks are located in ksuit (think of it as the core package). It handles components such as object factories, collators, trainers, runners, and trackers. Each of these comes with a base class that serves as an abstract class for building custom variations, along with ready-to-use implementations that have clearly defined usage patterns.
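The base-class pattern can be sketched with Python's `abc` module. `BaseCollator`, `PadCollator`, and `MaxLenPadCollator` are hypothetical names chosen for illustration and do not reflect ksuit's actual classes or signatures. An abstract base defines the contract, a ready-to-use implementation fulfills it, and a custom variation subclasses the ready-made one to change a single behavior.

```python
from abc import ABC, abstractmethod
from typing import List, Sequence


class BaseCollator(ABC):
    """Hypothetical abstract collator: turns a list of samples into one batch."""

    @abstractmethod
    def __call__(self, samples: Sequence[Sequence[float]]) -> List[List[float]]:
        ...


class PadCollator(BaseCollator):
    """Ready-to-use variant: right-pads every sample to the longest one in the batch."""

    def __init__(self, pad_value: float = 0.0) -> None:
        self.pad_value = pad_value

    def target_length(self, samples: Sequence[Sequence[float]]) -> int:
        return max((len(s) for s in samples), default=0)

    def __call__(self, samples):
        n = self.target_length(samples)
        return [list(s) + [self.pad_value] * (n - len(s)) for s in samples]


class MaxLenPadCollator(PadCollator):
    """Custom variation: pads to the longest sample but truncates anything beyond max_len."""

    def __init__(self, max_len: int, pad_value: float = 0.0) -> None:
        super().__init__(pad_value)
        self.max_len = max_len

    def target_length(self, samples):
        return min(super().target_length(samples), self.max_len)

    def __call__(self, samples):
        # Truncate first, then reuse the parent's padding logic.
        n = self.target_length(samples)
        return super().__call__([list(s)[:n] for s in samples])
```

For example, `PadCollator()([[1, 2], [3]])` returns `[[1, 2], [3, 0.0]]`, while `MaxLenPadCollator(max_len=2)([[1, 2, 3], [4]])` returns `[[1, 2], [4, 0.0]]`. Instantiating `BaseCollator` directly raises `TypeError`, which is what keeps the abstract contract enforced.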

To accommodate different levels of expertise (e.g. a seasoned ML engineer, a PhD student, a CFD expert), we provide multiple abstraction levels. The high-level emmi module offers a set of convenient, frequently used building blocks to get started quickly. It relies entirely on ksuit and can be extended with your own custom logic when necessary. In most cases, we recommend extending emmi first rather than working directly with ksuit.

Data is at the core of any machine learning workflow, and emmi_data_management provides tools for data fetching. It currently supports fetching and validating data from HuggingFace and AWS S3.

By sharing feedback about your preferred way of storing and accessing data, you can help us prioritize future features.

This module does not depend on any of the other modules; it can be used standalone or as part of the other modules when needed.
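A common way to validate fetched data is to compare file checksums against published values. The sketch below assumes that approach purely for illustration; it does not describe how emmi_data_management actually validates data, and `sha256_of` and `validate_file` are hypothetical helpers. It uses only the standard library, in the spirit of a module that works standalone.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in chunks so large dataset files need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def validate_file(path: Path, expected_sha256: str) -> bool:
    """Hypothetical check: the file exists and its SHA-256 matches the expected value."""
    return path.is_file() and sha256_of(path) == expected_sha256.lower()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "sample.bin"
        sample.write_bytes(b"emmi")
        expected = hashlib.sha256(b"emmi").hexdigest()
        print(validate_file(sample, expected))   # True
        print(validate_file(sample, "0" * 64))   # False
```

Streaming in fixed-size chunks keeps memory use constant regardless of file size, which matters for large simulation datasets.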

The inference engine, emmi_inference, can run inference with arbitrary models either from the CLI or from code. Note that custom models must be registered before they can be used from the CLI. emmi_inference relies on emmi mainly for access to custom models, datasets, and similar components. The CLI requires only three arguments:

a config path that defines the data, collators, model-related execution parameters, and output settings;

a model type (must match an entry in the model registry);

a checkpoint path.
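The registration requirement and the three CLI arguments can be sketched with a simple registry and `argparse`. Everything here is hypothetical: `MODEL_REGISTRY`, `register_model`, `parse_args`, `MyCustomModel`, and the flags `--config`, `--model-type`, and `--checkpoint` are illustrative and are not emmi_inference's actual interface. The sketch shows why a model type that was never registered cannot be resolved from the CLI.

```python
import argparse
from typing import Callable, Dict, List, Optional, Type

# Hypothetical registry mapping a model-type string to a model class.
MODEL_REGISTRY: Dict[str, Type] = {}


def register_model(name: str) -> Callable[[Type], Type]:
    """Decorator that records a model class under `name` so the CLI can look it up."""
    def decorator(cls: Type) -> Type:
        if name in MODEL_REGISTRY:
            raise ValueError(f"model type {name!r} is already registered")
        MODEL_REGISTRY[name] = cls
        return cls
    return decorator


@register_model("my_custom_model")
class MyCustomModel:
    """Placeholder for a user-defined model."""


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Hypothetical inference CLI sketch")
    parser.add_argument("--config", required=True,
                        help="config defining data, collators, execution parameters, outputs")
    # choices= rejects any model type that has not been registered beforehand.
    parser.add_argument("--model-type", required=True, choices=sorted(MODEL_REGISTRY))
    parser.add_argument("--checkpoint", required=True, help="path to the model checkpoint")
    return parser.parse_args(argv)


if __name__ == "__main__":
    args = parse_args(["--config", "infer.yaml",
                       "--model-type", "my_custom_model",
                       "--checkpoint", "model.ckpt"])
    model_cls = MODEL_REGISTRY[args.model_type]
    print(model_cls.__name__, args.config, args.checkpoint)
```

Registration happens at import time through the decorator, so the registry is already populated when `parse_args` builds the list of valid choices; passing an unregistered model type makes `argparse` exit with an error.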